AI in research

Artificial intelligence (AI) has become an essential tool in academic settings, where it speeds up the research process. From literature reviews to data analysis and the preparation of results, AI automates tasks, opens up new analytical possibilities, and helps researchers make better use of their data.


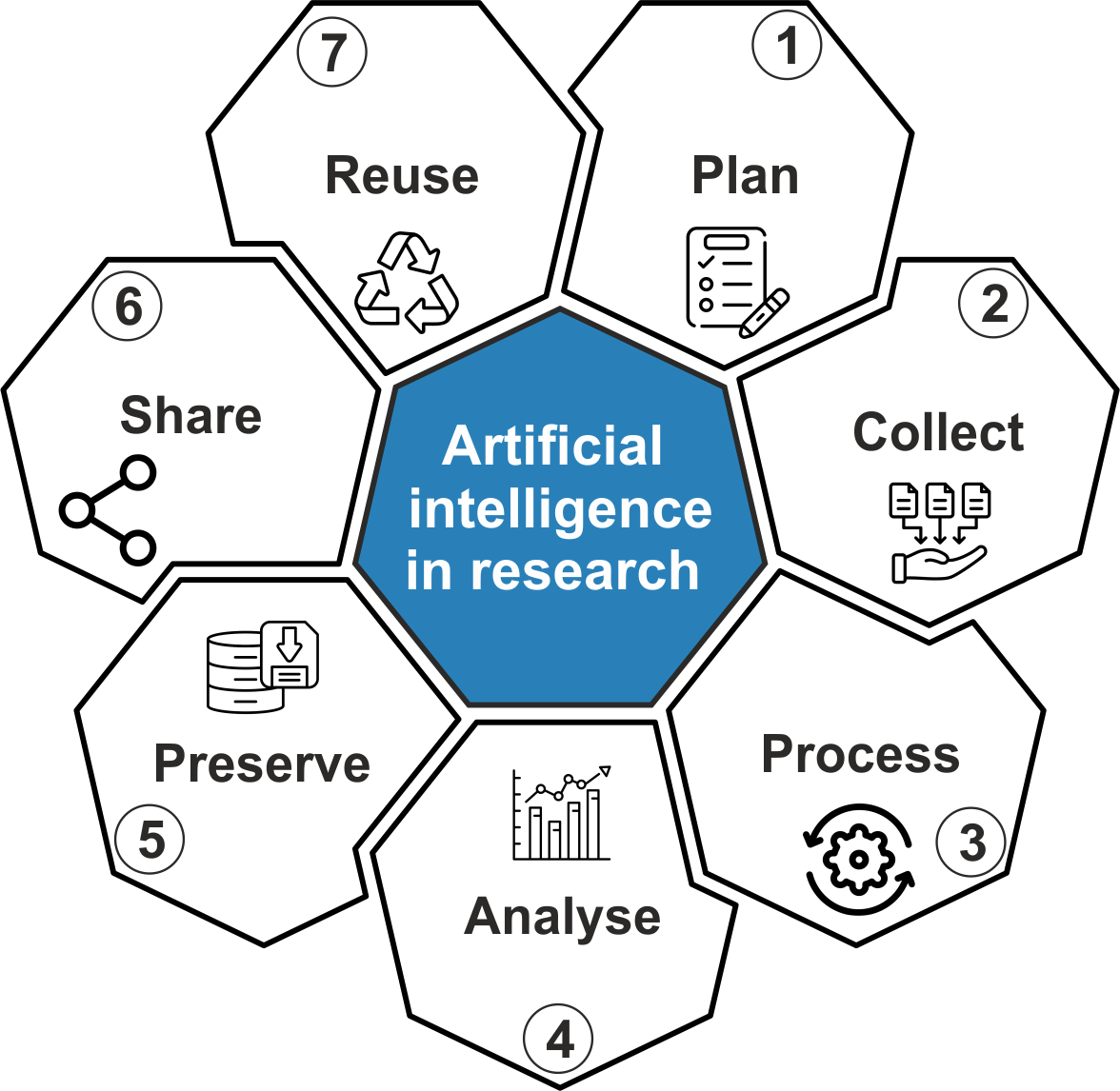

The research process is cyclical and consists of several stages: planning, data collection, processing, analysis, preservation, sharing, and reuse. AI can support this process by:

- conducting rapid literature analysis;

- optimizing data processing and anonymization;

- improving reproducibility and metadata preparation;

- assisting in the writing of scientific papers;

- enhancing research integrity through fraud detection and plagiarism checking.

While AI provides numerous advantages, its use must align with responsible research practices. This includes:

- secure and transparent data management;

- preservation of scientific integrity;

- promotion of reproducibility and openness;

- ensuring responsible AI disclosures in scientific publications.

In this section, you will learn about:

- the application of AI in research – key research tasks that can be facilitated with AI tools;

- ethical and legal aspects of AI usage – data security and privacy protection, maintaining scientific integrity, and ensuring openness and reproducibility.

Artificial intelligence (AI) is increasingly being integrated into research processes, simplifying and accelerating various tasks. Its applications span the entire research cycle – from study planning to data reuse. The tools below are grouped by the research stage they support.

1. Research planning with AI

AI helps systematize literature reviews, design experiments, and prepare research proposals:

- literature analysis – Elicit, Scite, and Research Rabbit quickly find and summarize relevant scientific sources;

- experiment planning – JASP and IBM SPSS Modeler assist in hypothesis formulation and experimental design;

- research proposal writing – ChatGPT, Claude, and SciSpace help structure text and formulate ideas;

- hypothesis generation – HyperWriter’s AI can help identify gaps in research and suggest novel hypotheses.
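Statistical tools used for experiment planning typically offer power analysis, which estimates how many participants a study needs before data collection starts. The sketch below shows the standard normal-approximation formula for comparing two group means, so that a tool's answer can be sanity-checked by hand. The function name and the defaults (significance level 0.05, power 0.80) are illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed per group for a two-sided comparison of two means.

    Normal approximation: n = 2 * (z_(1 - alpha/2) + z_(power))**2 / d**2,
    where d is the expected standardized effect size (Cohen's d).
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must lie strictly between 0 and 1")
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value of the two-sided test
    z_power = std_normal.inv_cdf(power)          # quantile matching the desired power
    n = 2 * (z_alpha + z_power) ** 2 / effect_size ** 2
    return ceil(n)  # round up: a fraction of a participant is not possible


print(sample_size_per_group(0.5))  # medium effect: 63
print(sample_size_per_group(0.8))  # large effect: 25
```

Exact calculations based on the t-distribution give slightly larger numbers, so dedicated software remains the reference; the sketch only makes the inputs (effect size, significance level, power) explicit.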

2. Data collection and processing

AI automates data collection and annotation and helps protect sensitive information:

- automated data collection – combining BeautifulSoup (for extracting content from web pages) with GPT models helps collect and process web data;

- text and image annotation – Prodigy and Doccano assist in data labeling and categorization;

- data anonymization – Presidio and OpenDP help protect personal data; machine learning-based anonymization techniques can improve privacy while preserving the usefulness of the data.
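Combining BeautifulSoup with a GPT model is usually a two-step pipeline: extract readable text from web pages, then send that text to a language model for summarizing or structuring. A minimal sketch of the first step follows. It assumes Python's built-in `html.parser` as a stand-in for BeautifulSoup so that it runs without extra packages, and it omits the model call entirely; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text from an HTML document, skipping <script> and <style> content."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside a tag whose text is ignored
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def extract_text(html: str) -> str:
    """Return the visible text of `html` as one space-separated string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


page = ("<html><head><style>p {color: red}</style></head>"
        "<body><h1>Results</h1><p>Sample size: 120</p><script>track()</script></body></html>")
print(extract_text(page))  # Results Sample size: 120
```

Text scraped this way and passed to an external model is subject to the same privacy rules as any other research data.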

3. Data analysis and interpretation

AI enhances data analysis, statistical processing, and qualitative data coding:

- big data processing – Google AutoML, IBM Watson Studio, and PyCaret help develop and train analytical models;

- statistical analysis – JASP, SmartPLS, and DataRobot help identify correlations in data;

- thematic coding – NVivo and ATLAS.ti AI Coding facilitate qualitative data analysis;

- bias detection and mitigation – the Holistic AI toolkit offers techniques to measure and mitigate bias across various tasks, aiming to make AI systems more trustworthy.
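Bias-measurement toolkits report group fairness metrics that compare how often a model gives a positive outcome to different groups. A minimal plain-Python sketch of two common ones, statistical parity difference and disparate impact ratio, is shown below. The data and function names are hypothetical, and this is not the Holistic AI API.

```python
def selection_rate(outcomes: list[int], groups: list[str], group: str) -> float:
    """Share of positive outcomes (coded 1) among records belonging to `group`."""
    members = [y for y, g in zip(outcomes, groups) if g == group]
    if not members:
        raise ValueError(f"no records for group {group!r}")
    return sum(members) / len(members)


def statistical_parity_difference(outcomes, groups, group_a, group_b) -> float:
    """Selection rate of group_a minus that of group_b; 0 means equal rates."""
    return selection_rate(outcomes, groups, group_a) - selection_rate(outcomes, groups, group_b)


def disparate_impact_ratio(outcomes, groups, group_a, group_b) -> float:
    """Selection rate of group_a divided by that of group_b; 1 means equal rates."""
    return selection_rate(outcomes, groups, group_a) / selection_rate(outcomes, groups, group_b)


# Hypothetical model decisions (1 = positive outcome) for members of groups "A" and "B"
outcomes = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

print(round(statistical_parity_difference(outcomes, groups, "B", "A"), 2))  # -0.2
print(round(disparate_impact_ratio(outcomes, groups, "B", "A"), 2))          # 0.67
```

A ratio of 0.67 falls below the 0.8 threshold of the common "four-fifths" rule of thumb, which would flag this hypothetical model for closer review. Such metrics show that a disparity exists; they do not explain its cause.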

4. Data storage and management

AI supports organizing and structuring research data according to FAIR principles:

- metadata generation – Dataverse AI plugins and the DDI metadata standard help structure descriptions of research data;

- data organization – FAIR Assistant and Datalore improve data management;

- file classification and search – Haystack and OpenAI embeddings streamline document management.
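AI plugins can generate metadata automatically, but the output should still be checked against what a FAIR record needs: a title, creators, a license, keywords for findability, and a checksum so that others can confirm they have the same file. A minimal sketch, assuming a hand-picked set of field names rather than any particular repository's schema:

```python
import hashlib
import json
from datetime import date


def build_metadata(file_name: str, content: bytes, title: str, creators: list[str],
                   license_id: str, keywords: list[str]) -> dict:
    """Return a minimal descriptive record for one data file.

    Field names are illustrative only; a repository or standard (e.g. DataCite, DDI)
    defines its own required fields.
    """
    return {
        "title": title,
        "creators": creators,                      # "Family, Given" order
        "date_created": date.today().isoformat(),  # ISO 8601 date
        "file_name": file_name,
        "size_bytes": len(content),
        "checksum_sha256": hashlib.sha256(content).hexdigest(),  # lets others verify the file
        "license": license_id,
        "keywords": keywords,
    }


# Hypothetical CSV content kept in memory; for a real file, read its bytes from disk
csv_bytes = b"id,score\n1,4\n2,5\n"
record = build_metadata("survey_scores.csv", csv_bytes, "Student survey scores",
                        ["Doe, Jane"], "CC-BY-4.0", ["survey", "education"])
print(json.dumps(record, indent=2))
```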

5. Research output preparation and dissemination

AI assists in academic writing, visualization, and dissemination:

- manuscript editing – Trinka, Grammarly, and ChatGPT enhance language quality;

- data visualization – Tableau AI, Datawrapper, and Flourish automate graph creation;

- research dissemination – SciSpace, Microsoft Copilot, and TLDRThis generate concise summaries and press releases;

- AI-assisted citation generation and verification – tools such as CitationGenerator and SciSpace Citation Generator help keep citations accurate and reduce the risk of fabricated references.
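Citation tools reduce, but do not remove, the risk of fabricated references in AI-generated text. One cheap, automatable first check is whether each DOI is at least well-formed. The sketch below uses a regular expression based on a pattern Crossref has published for modern DOIs; the helper names and example DOIs are hypothetical.

```python
import re

# Based on a pattern published by Crossref that matches the vast majority of modern DOIs.
# It checks syntax only: a well-formed DOI may still be fabricated or point to another work.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/[-._;()/:A-Z0-9]+$", re.IGNORECASE)

DOI_PREFIXES = ("https://doi.org/", "http://doi.org/",
                "https://dx.doi.org/", "http://dx.doi.org/", "doi:")


def normalize_doi(raw: str) -> str:
    """Strip a leading 'DOI:' label or doi.org URL so only the bare DOI remains."""
    doi = raw.strip()
    for prefix in DOI_PREFIXES:
        if doi.lower().startswith(prefix):
            return doi[len(prefix):].strip()
    return doi


def is_wellformed_doi(raw: str) -> bool:
    return DOI_PATTERN.match(normalize_doi(raw)) is not None


# Hypothetical DOIs as they might appear in an AI-generated reference list
for candidate in ["DOI: 10.1000/xyz123",                # True
                  "https://doi.org/10.1000/abc.def-1",  # True
                  "10.1000/abc def",                    # False: contains a space
                  "doi.org/fake"]:                      # False: no 10.xxxx prefix
    print(candidate, is_wellformed_doi(candidate))
```

A DOI that passes this check should still be resolved through https://doi.org/ and the returned title and authors compared with the citation; that network step is left out here.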

6. Data and research reuse

AI supports open data analysis, reproducibility, and data sharing:

- open data integration – Google Dataset Search combined with AI models helps locate and analyze additional data;

- reproducibility verification – Code Ocean and Whole Tale package code, data, and computing environments so that analyses can be rerun and checked;

- data sharing – Zenodo enables AI-driven tagging, while Figshare provides AI-assisted research data description and publication.
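Platforms such as Code Ocean and Whole Tale capture the environment, code, and data behind a result. The same idea can be applied on a small scale by saving a manifest next to the results. A minimal sketch, assuming the analysis is a Python script; the manifest fields and the placeholder `run_analysis` function are hypothetical, and this is not how either platform works internally.

```python
import hashlib
import json
import platform
import random
from datetime import datetime, timezone


def make_manifest(seed: int, inputs: dict[str, bytes], parameters: dict) -> dict:
    """Record what another person needs to rerun the analysis and compare results."""
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "python_version": platform.python_version(),
        "platform": platform.platform(),
        "random_seed": seed,
        "inputs_sha256": {name: hashlib.sha256(data).hexdigest() for name, data in inputs.items()},
        "parameters": parameters,
    }


def run_analysis(seed: int) -> list[float]:
    """Placeholder analysis: draws from a seeded generator, so reruns give identical numbers."""
    rng = random.Random(seed)  # local generator, unaffected by other code using `random`
    return [round(rng.gauss(0, 1), 4) for _ in range(3)]


SEED = 2024
inputs = {"measurements.csv": b"id,value\n1,0.5\n2,0.7\n"}  # hypothetical input data
manifest = make_manifest(SEED, inputs, {"model": "linear regression", "alpha": 0.05})
manifest["results"] = run_analysis(SEED)
print(json.dumps(manifest, indent=2))
```

A seed makes only the random parts of an analysis repeatable; different library versions can still change results, which is why the environment is recorded as well.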

AI offers vast possibilities in research, but its application requires responsible research practices, ensuring data security, reproducibility, and scientific integrity.

The use of artificial intelligence (AI) in research offers numerous advantages; however, it requires a responsible approach. To ensure that AI tools are used in compliance with academic ethics and legal requirements, it is essential to adhere to the principles of responsible research practices. These include data management and privacy protection, maintaining scientific integrity, and ensuring openness and reproducibility.

All existing ethical and legal requirements remain in force when using AI. Researchers must consider these principles when evaluating the use of a specific AI tool to ensure that it complies with data protection, intellectual property, and scientific integrity standards.

1. Data management and privacy protection

AI use in research often involves processing large volumes of data, including sensitive or personally identifiable information. To comply with privacy protection requirements, the following must be observed:

- data anonymization – tools such as Presidio and OpenDP help ensure that personal data is removed, masked, or otherwise protected;

- AI-driven privacy-preserving models – deep learning-based anonymization methods, such as PriCheXy-Net, help preserve privacy while maintaining data integrity;

- access control – secure data storage and access systems must be used to prevent unauthorized use of information;

- compliance with regulatory requirements – AI use must align with regulatory frameworks, such as the GDPR and other data protection regulations.
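Tools such as Presidio detect personal data with trained recognizers and then redact, replace, or encrypt it. The sketch below is a much cruder, standard-library stand-in that shows two basic operations: masking direct identifiers with pattern matching, and pseudonymizing names with a keyed hash. The regular expressions, function names, and key are illustrative assumptions, not Presidio's API. Under the GDPR, pseudonymized data that can be re-identified with additional information (here, the key) is still personal data, so the requirements above continue to apply.

```python
import hashlib
import hmac
import re

# Crude patterns for two kinds of direct identifiers. They miss names and addresses and
# can flag non-personal numbers (e.g. dates written as 2024-05-01).
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def mask_contacts(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholder tags."""
    text = EMAIL.sub("<EMAIL>", text)
    return PHONE.sub("<PHONE>", text)


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable code via a keyed hash (HMAC-SHA256).

    The same input and key always give the same code, so records can still be linked,
    but the code cannot be traced back without the key. Store the key apart from the data.
    """
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    return "P-" + digest[:16]


key = b"replace-with-a-randomly-generated-secret"  # hypothetical key; never hard-code in practice
print(mask_contacts("Write to jane.doe@uni.example or call +371 2000 0000."))
# Write to <EMAIL> or call <PHONE>.
print(pseudonymize("Jane Doe", key))
```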

Critical risk! Personal data may only be entered and used in AI systems that are explicitly certified for GDPR compliance. Publicly available AI tools (e.g., ChatGPT, Claude) are not secure for processing personal data due to the lack of control over where and how this data is stored.

2. Ensuring scientific integrity

AI can rapidly collect, generate, and analyze information, but its application must be critically assessed to avoid incorrect or misleading results:

- verification of data sources – AI-generated information must be checked for source reliability and adherence to scientific standards;

- prevention of plagiarism and fraud – tools such as Caps, Turnitin, and iThenticate should be used to check that AI-generated text does not reproduce existing sources;

- transparency of AI decisions – it is necessary to explain how AI analysis results are obtained and interpreted to avoid drawing inappropriate conclusions.

Critical risk! Entering unpublished articles and ideas into publicly available AI systems may lead to premature public disclosure. If research results are processed in commercial AI tools, they may become part of training datasets or be leaked beyond the author’s control. Use only internally controlled and secure AI systems!

3. Openness and reproducibility

Research reproducibility and transparency are fundamental academic principles that must also be observed when using AI. However, certain risks may threaten their implementation:

- public availability of data and code – data and algorithms used should be documented and, if possible, made publicly accessible via platforms like Zenodo and Figshare. There is a risk that AI-generated analyses are not sufficiently documented, making reuse and verification difficult;

- documentation of metadata and methodology – AI tools such as Dataverse AI plugins help automatically generate and structure metadata. However, insufficient transparency in AI operations may lead to situations where analytical processes are not clearly reproducible;

- verifiability of results – to ensure AI analysis reproducibility, version control and code management platforms such as Code Ocean should be used. There is a risk that AI models generate results that are difficult to replicate or verify without detailed information on their operation principles and training data.

To mitigate these risks, researchers must document the AI tools they use, report that use transparently, and adhere to scientific reproducibility standards.
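Reproducing or auditing AI-assisted work is much easier when each use is logged at the time: which tool and version, for what purpose, with what prompt, and whether the output was kept. Such a log also makes AI disclosures in publications straightforward to write. A minimal sketch of a JSON Lines log; the record fields and the tool name are hypothetical, not a required format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AIUsageRecord:
    """One entry in a project's AI-usage log; field names are illustrative."""
    tool: str           # product name, as it should appear in a disclosure statement
    model_version: str  # model or version identifier reported by the tool, if available
    purpose: str        # what the output was used for
    prompt: str         # exact prompt text, so the step can be inspected later
    output_used: bool   # whether the output entered the analysis or manuscript
    timestamp_utc: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def to_log_line(record: AIUsageRecord) -> str:
    """Serialize a record as one JSON line, adding a hash that identifies the exact prompt."""
    entry = asdict(record)
    entry["prompt_sha256"] = hashlib.sha256(record.prompt.encode("utf-8")).hexdigest()
    return json.dumps(entry, ensure_ascii=False)


log_lines = [
    to_log_line(AIUsageRecord(
        tool="ExampleChat",  # hypothetical tool name
        model_version="unknown",
        purpose="grammar check of the Discussion section",
        prompt="Correct the grammar in the following paragraph: <paragraph text>",
        output_used=True,
    ))
]
# In practice, append each line to a file kept under version control with the project.
print(log_lines[0])
```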

4. Steps to mitigate AI usage risks

To ensure ethical and legally compliant AI use in research, the following principles must be followed:

- evaluate AI tools – ensure that the AI tools used comply with data protection requirements and do not pose a risk of disclosing sensitive information;

- use closed systems – when processing confidential data, opt for institutionally managed AI platforms or local solutions;

- do not input sensitive information into public AI tools – personal data, unpublished research, and confidential materials must not be entered into publicly available AI tools;

- stay informed about AI developments and regulations – AI technologies and regulations are evolving rapidly, making it important to keep up with the latest recommendations and legal requirements.

AI offers significant opportunities in research, but its use must be ethical and legally responsible. Researchers must be vigilant regarding data privacy, information reliability, and adherence to scientific accountability to ensure AI application aligns with best academic practices.
